Over the past five years, colleagues from the Centre for Development Impact (CDI) – a joint initiative between the Institute of Development Studies, Itad and University of East Anglia – have been innovating with, and learning how to use, Contribution Analysis as an overarching approach to impact evaluation. In this blog series, we share our learning and insights, some of them in raw, emergent form, and highlight the complexities, nuances, excitements and challenges of embracing new ways of doing impact evaluation.


Causal hotspots for learning and accountability

We begin this series by sharing ideas about ‘causal hotspots’ that first surfaced as ‘aha’ moments in our collaboration on the CDI short course Contribution Analysis for Impact Evaluation – the moments when we found the ways and words to better articulate our ideas and help people navigate the messy realities of theory-based evaluation.

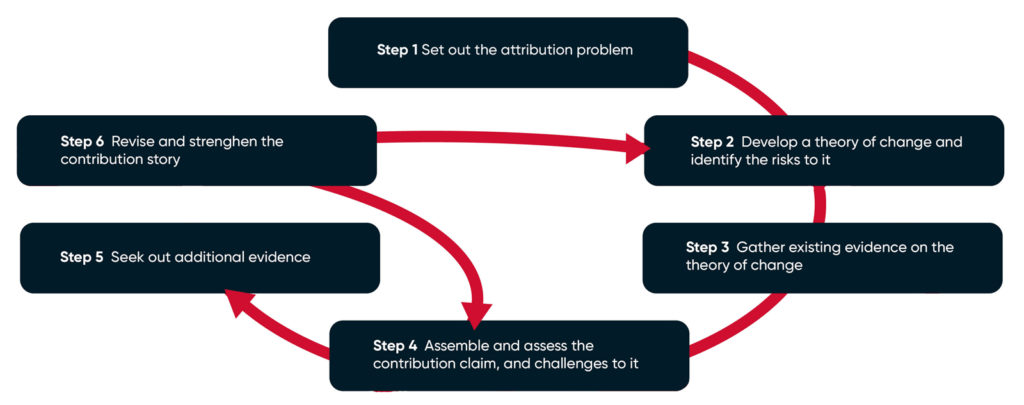

In the course, we introduce Contribution Analysis (CA) as an overarching approach to theory-based evaluation of outcomes and impact. CA is commonly applied through Mayne’s six steps, which, while useful as a structuring device for critical thinking and sense-making, can in practice become somewhat recipe-like. A sequence of six steps is uncomfortably linear and contradicts our real-life experience of evaluation as an emergent, learning-oriented and reflexive process. Through our practice, we have discovered the strength of CA as an iterative approach to reflecting on and refining theories of change (ToC), recasting the six steps into an iterative cycle (see Figure 1).

Figure 1. Iterative use of theories of change in contribution analysis

Source: Apgar, M., Hernandez, K. and Ton, G. (2020) ‘Contribution Analysis for Adaptive Management’, ODI

Figure 1 sets out the following steps:

- Step 1: Set out the attribution problem

- Step 2: Develop a theory of change and identify the risks to it

- Step 3: Gather existing evidence on the theory of change

- Step 4: Assemble and assess the contribution claim and challenges to it

- Step 5: Seek out additional evidence

- Step 6: Revise and strengthen the contribution story. Then return to either step 2 or step 4.

Embracing ToC as a facilitated, reflexive process to identify and forge impact pathways, so that implementers become more effective in supporting social change and commissioners get more bang for their evaluation buck, should be a liberating experience! Moving beyond ToC as a tool to define what to evaluate at the outset, to ToC as the device around which people learn, is exciting because it opens us up to plural, partial and contested views of how change happens. We are pleased to see this trend growing in the sector, as shown by Isabel Vogel’s influential review of ToC and a more recent review commissioned by the Swiss Development Cooperation, among others.

The jungle of causality

But liberation and excitement, as well as plurality, bring new challenges. As Rick Davies has noted, a central tension in building ‘good’ ToCs is keeping them simple while acknowledging that reality is complex. Managing this tension requires a process for navigating, depicting and working with ToCs so that revisiting them actually generates meaningful and useful insights.

On the one hand, keeping it simple is critical for agreement and ongoing engagement with all stakeholders: imagine here the classic boxes-and-arrows diagram of a programme theory, accompanied by a crisp narrative to communicate about the programme. On the other hand, refining a ToC at a level of detail that provides real, nuanced learning, especially for implementers (the ultimate experts in their own interventions), requires that we dig deeper. The ToC needs to be plausible (or ‘robust’, as John Mayne puts it) and evaluable. It needs to be granular enough to support a nuanced understanding of specific causal mechanisms, while also linking to social science theories and to reflection on past interventions. This causally complex, theory-heavy approach can quickly become a jungle of entangled arrows that may be useful for individual thinking but not for collective reflection, and even less so for external communication.

Adding to the foliage in the jungle is the need to use ToCs across scales and contexts. Large programmes tend to apply different intervention modalities in different contexts, affecting dynamics that work at different scales (e.g. macro-level national legislation, meso-level institutional strengthening, micro-level experimentation and, at an even finer scale, behaviour change in individuals or groups). We have learned that it is possible to cater to these diverse needs, but doing so requires moving away from one all-encompassing, ‘jungle-like’ ToC towards multiple ToCs that are interrelated in specific and identifiable ways. Each diagram can include one or more impact pathways that are ‘nested’ in a more abstract and ‘simple’ overarching whole. Tom Aston has recently written about this challenge and reflected on multiple approaches to unpacking ToCs across scales, including Mayne’s nested ToC approach, realist evaluation’s middle-range theory and Mel Punton’s use of ladders of abstraction. It seems that we are, as a community of practice, becoming more adept at navigating multiple scales and contexts in theory-based evaluation.

Getting lost in the jungle

In theory-based evaluation, the better the ToC, the better the evaluation will be. Yet as we hone our skills with multiple ToCs, embracing a causally complex, theory-heavy and multi-scalar approach, the risk of getting lost in the jungle of causality is high.

The iterative use of the CA steps can guide a process that is structured enough but not prescriptive. Iteration between step 2 (develop a theory of change and identify the risks to it) and step 3 (gather existing evidence on the theory of change) is crucial precisely because we need to unpack, repack, zoom in and zoom out to gradually build and refine a theory of change that is plausible and evaluable. But the most important step for finding a way through the forest is the reflection in step 4: assemble and assess the contribution claim and the key challenges to it. Here you must decide specifically which part of the causal pathway holds the contribution claims that you will examine in more depth. And at this point it can become hard to see the wood for the trees!

Theory-based evaluation requires that we make choices: it is near impossible for any evaluation to have the time and resources to examine every link in the chain. The critical question is how we decide where to focus. How much granularity is enough to design a ‘rigorous’ evaluation? When have we gone too far in detailing processes in the ToC and started going down rabbit holes that are not essential? These are the questions participants in our short course asked us as we first pushed them into the jungle and guided them through it. They led us to articulate the concept of the ‘causal hotspot’ as a way out.

Finding the causal hotspots

Two dictionary definitions of ‘hotspot’ are relevant here: (1) ‘a place where war is likely to happen’ and (2) ‘a popular or exciting place’. Both help us explain the use of causal hotspots.

A causal hotspot is a place in the ToC where evidence is contested. In development interventions, stakeholders often disagree about the relevance or effectiveness of activities or change processes. In research-for-development programmes, epistemological battles over approaches to evidence generation are ongoing, and paradigm wars are waged over how evidence might, should and does support change. A causal hotspot is where there is most value in undertaking evaluation to contribute to theory and practice. It may also simply be an obvious gap in evidence that evaluation research can fill, maximising the evaluation’s contribution to learning or accountability.

A causal hotspot can also be the area of the ToC that one or more stakeholders in the evaluation emphasise. Donors might be most interested in one part of the ToC because learning about that particular aspect of the pathway of change is useful for influencing policy. Programme managers might focus on other areas that are central to future programming. Participants on the ground might question still other areas, especially the ways in which support activities directly affect their lives and work. All of these could be relevant places to explore in depth.

The most powerful causal hotspots combine both aspects: evidence is contested or lacking, and stakeholders see value in investing in evaluation. Step 4 of CA generally produces a wish list of causal hotspots, after which prioritisation is necessary. We recommend focusing on about three hotspots: enough to provide diverse audiences with additional evidence on key challenges in the (nested) ToCs, while respecting real-world resource constraints.

Working with causal hotspots is a practical way to zoom in, unpack and design evaluation research around specific causal configurations, and to define specific evaluation questions and methods. In our next two blogs, we provide examples from our practice to bring these ideas to life.